I’m sure you all feel it. Conversations about AI seem inescapable these days. Maybe you’re just joining the conversation, or maybe you’re like Dr. Safiya Noble and Dr. Ruha Benjamin and you’ve been thinking about it for a long time. Last week I read yet another article by Eric Schmidt warning us about the potential harms of AI and offering some proposed solutions. My eyes roll with the inevitability of physics as I think about this former Google CEO performing a posture of care, benefiting from the risky whistleblowing black feminists were doing while he was (and still is) in bed with Google. I have to pull out all my practices to keep my blood from boiling when I think about what happened to Timnit Gebru when she shorted the circuits at Google, or about the gaslighting Dr. Safiya Noble powered through while developing her dissertation. In “Safiya Noble Knew the Algorithm Was Oppressive,” Michelle Ruiz writes:

“Noble was met with resistance when she first wrote the dissertation that became Algorithms of Oppression as a Ph.D. student at the University of Illinois at Urbana-Champaign in 2012. “There were faculty along the way who said things like, ‘This research isn’t real. It’s impossible for algorithms to discriminate because algorithms are just math and math can’t be racist,’” she remembers. Noble pushed back (“they were value systems that were getting encoded mathematically”), accepting every invite she received to talk about her work and naming every one of those talks “Algorithms of Oppression,” branding her message and, in a meta twist, making it googleable.”

While Dr. Safiya Noble was fighting for the validity of her research and cultivating cultural concern, Eric Schmidt was the Chief Executive Officer of Google, and he later became its executive chairman. Because of the commitment to care rooted in the research of Dr. Ruha Benjamin, Dr. Safiya Noble, Timnit Gebru, and many, many others, concern about AI and algorithmic bias is now normalized in our cultural and media landscape, and powerful white men like Eric get to perform “thought leadership” in it. That normalization exists thanks to work black feminists cared enough to share even while they were being attacked for it. I bring my blood down to a simmer because the boil is unsustainable. This is just how it goes. The laboratory of racial capitalism presents some “innovation”; black feminists do the care work of researching and calling out the possibilities of harm while risking their careers and mental health; then, years later, some white guy re-presents a watered-down version of the black feminist research, authors the articles, writes the books, and may even start a new company, collecting the harvest from the seed of black feminist sacrifice, risk, and care.

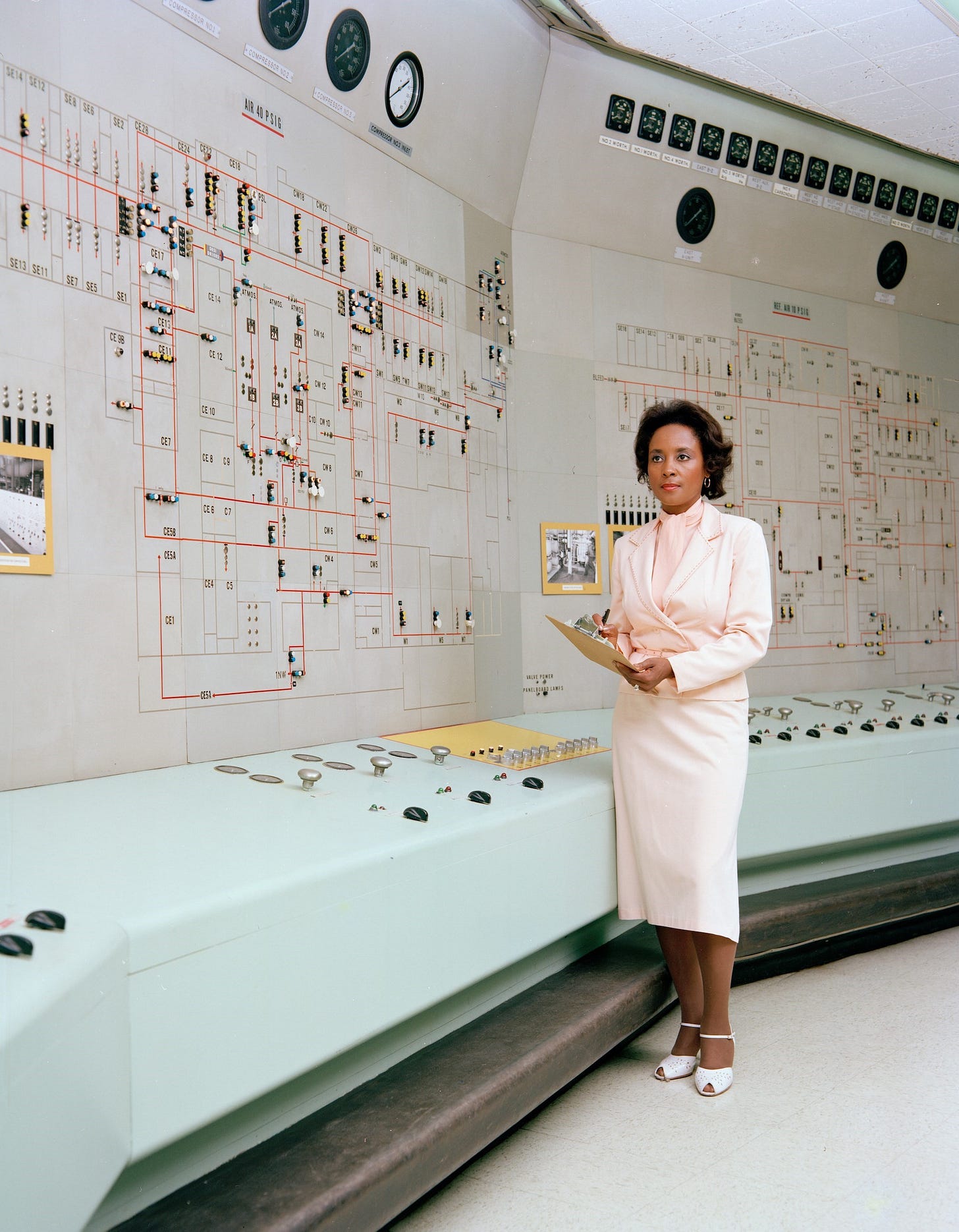

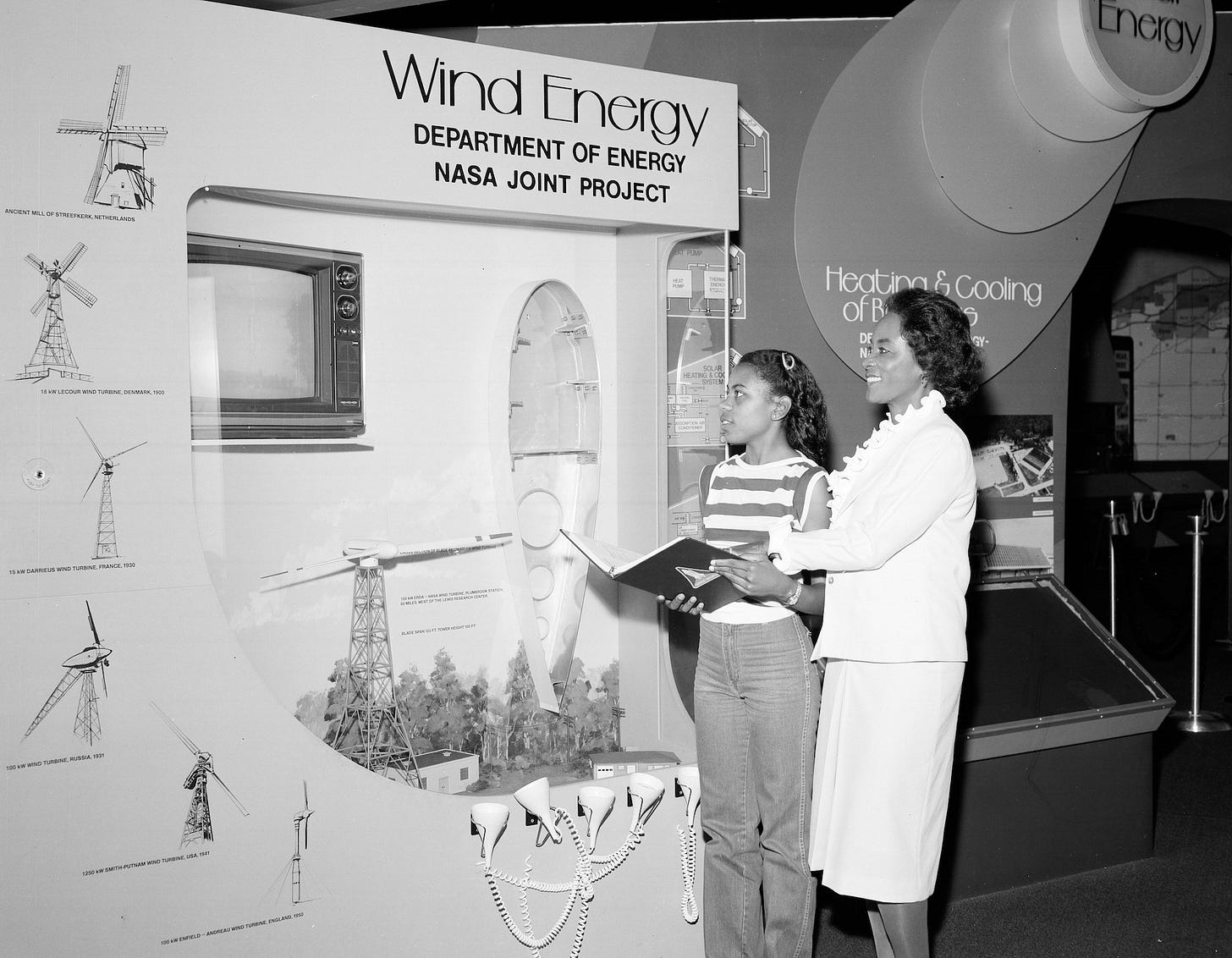

So “what time is it on the clock of the world?”¹ I’m trusting black feminists to tell it, because no one else has to watch the clock more closely. They are human computers, constantly telling the time and bracing us for inevitable futures.

What if we replaced our current “dominant aesthetic of innovation”² with black feminist technologies of care?

What do we build if we start with black feminism as the framework for software development?

Is the infinite elasticity of black feminist care work the band holding it all together? (Hint: yes)

I drift off to sleep and dream about snapping, then wake up the next day and get back to work. It’s Tuesday morning, and the sun has barely kissed this corner of the earth, as the Red-winged Blackbird singing outside my window reminds me. My flight to Denver, Colorado, boards at 3:33pm, taking me to a week-long workshop where the participants and I will create a “post-future punk catalog of multispecies response-abilities” and reflect on our power and positionality as they relate to bioengineering research and development. The irony of showing up to a workshop on imagining responsible bioengineering wearing leather Margielas, having left a sizable trail of CO2 emissions to get there, is an awkward reality I’ll worry about later. For now, I’m thinking about all the energy-expensive research and “human computing” indigenous and black feminist folks are doing around the inevitable harms of biotechnology and biopower. For now, I’m thinking about the inevitable white folks who will show up with solutions to problems they created. Where are we on the clock of synbio, biotechnology, bioengineering, etc.? I’m not sure, but I rest knowing black feminism is always right on time.

In 1974, Grace Lee Boggs and James Boggs asked, “What time is it on the clock of the world?” in their book *Revolution and Evolution in the Twentieth Century*.

“Whether or not design-speak sets out to colonize human activity, it is enacting a monopoly over creative thought and praxis. Maybe what we must demand is not liberatory designs but just plain old liberation. Too retro, perhaps? And that is part of the issue — by adding “design” to our vision of social change we rebrand it, upgrading social change from “mere” liberation to something out of the box, “disrupting” the status quo. But why? As Vinsel queries, “would Design Thinking have helped Rosa Parks ‘design’ the Montgomery Bus Boycott?” It is not simply that design thinking wrongly claims newness, but in doing so it erases the insights and agency of those who are discounted because they are not designers, capitalizing on the demand for novelty across numerous fields of action and coaxing everyone who dons the cloak of design into being seen and heard through the dominant aesthetic of innovation.” — Ruha Benjamin, Race After Technology: Abolitionist Tools for the New Jim Code (2019)